Installation and use

Convolutional Pose Machines (CPMs) are multi-stage convolutional neural networks for estimating articulated poses, such as human poses. This repo provides a tool for testing CPMs on live video. We currently host two implementations, based on different frameworks:

- Caffe implementation (official repo).

- TensorFlow implementation.
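In a CPM, each stage outputs one belief map (heatmap) per joint, and later stages refine the maps of earlier ones. The following is a minimal sketch of how joint positions can be read off the final-stage maps. It assumes each map is a plain 2D list of scores and takes the highest-scoring pixel per joint. The function names and the 0.1 visibility threshold are hypothetical and are not taken from this repo's code:

```python
from typing import List, Optional, Tuple

# A belief map is a 2D grid of scores (rows x cols) for a single joint.
BeliefMap = List[List[float]]


def joint_from_belief_map(
    belief: BeliefMap, threshold: float = 0.1
) -> Optional[Tuple[int, int, float]]:
    """Return (x, y, score) of the highest-scoring pixel, or None if below threshold."""
    best_x, best_y, best_score = -1, -1, float("-inf")
    for y, row in enumerate(belief):
        for x, score in enumerate(row):
            if score > best_score:
                best_x, best_y, best_score = x, y, score
    if best_score < threshold:
        return None  # hypothetical rule: weak peaks mean "joint not visible"
    return best_x, best_y, best_score


def joints_from_belief_maps(
    maps: List[BeliefMap], threshold: float = 0.1
) -> List[Optional[Tuple[int, int, float]]]:
    """Apply the per-joint argmax to every belief map of the final stage."""
    return [joint_from_belief_map(m, threshold) for m in maps]


if __name__ == "__main__":
    maps = [
        [[0.0, 0.2], [0.9, 0.1]],    # joint 0 peaks at x=0, y=1
        [[0.01, 0.02], [0.03, 0.0]],  # joint 1 never reaches the threshold
    ]
    print(joints_from_belief_maps(maps))  # [(0, 1, 0.9), None]
```

Belief maps are usually produced at a lower resolution than the input image, so in practice the returned coordinates would still need to be rescaled to image pixels.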

Dependencies

- JdeRobot (installation guide)

- TensorFlow (installation guide)

- Caffe (recommended installation guide)

Other dependencies, such as NumPy, PyQt and OpenCV, should be installed automatically along with the ones above. If TensorFlow and/or Caffe are installed with GPU support, the code in this repo takes advantage of it, which brings pose estimation close to real time.
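To confirm that these indirect dependencies really were pulled in, you can check which modules the current Python interpreter can import. The following is a minimal sketch that uses only the standard library. The module names are assumptions: PyQt may be installed as `PyQt4` on older setups, and the JdeRobot bindings are not checked:

```python
import importlib.util
from typing import Dict, Iterable

# Assumed top-level module names; adjust to match your installation.
DEFAULT_MODULES = ("numpy", "cv2", "PyQt5", "tensorflow", "caffe")


def check_modules(names: Iterable[str] = DEFAULT_MODULES) -> Dict[str, bool]:
    """Map each module name to whether it can be found (without importing it)."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    for name, found in check_modules().items():
        print(f"{name:12s} {'found' if found else 'MISSING'}")
```

`find_spec` only locates the module and does not import it, so the check stays fast even when TensorFlow or Caffe is present.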

Usage

In order to test CPMs with a live video feed, we have built the humanpose component within the framework of JdeRobot, a middleware that provides several tools and drivers for robotics and computer vision tasks.

Once all the dependencies have been installed and the repo has been cloned, download the trained Caffe and TensorFlow models (provided by their authors):

chmod +x get_models.sh

./get_models.sh

You can choose among several video sources to feed the CPM (ROS, JdeRobot, local webcam or video file). The video source, as well as other settings, can be modified in the humanpose.yml file. Once you have set the desired parameters, run the following from another terminal:

python humanpose.py humanpose.yml

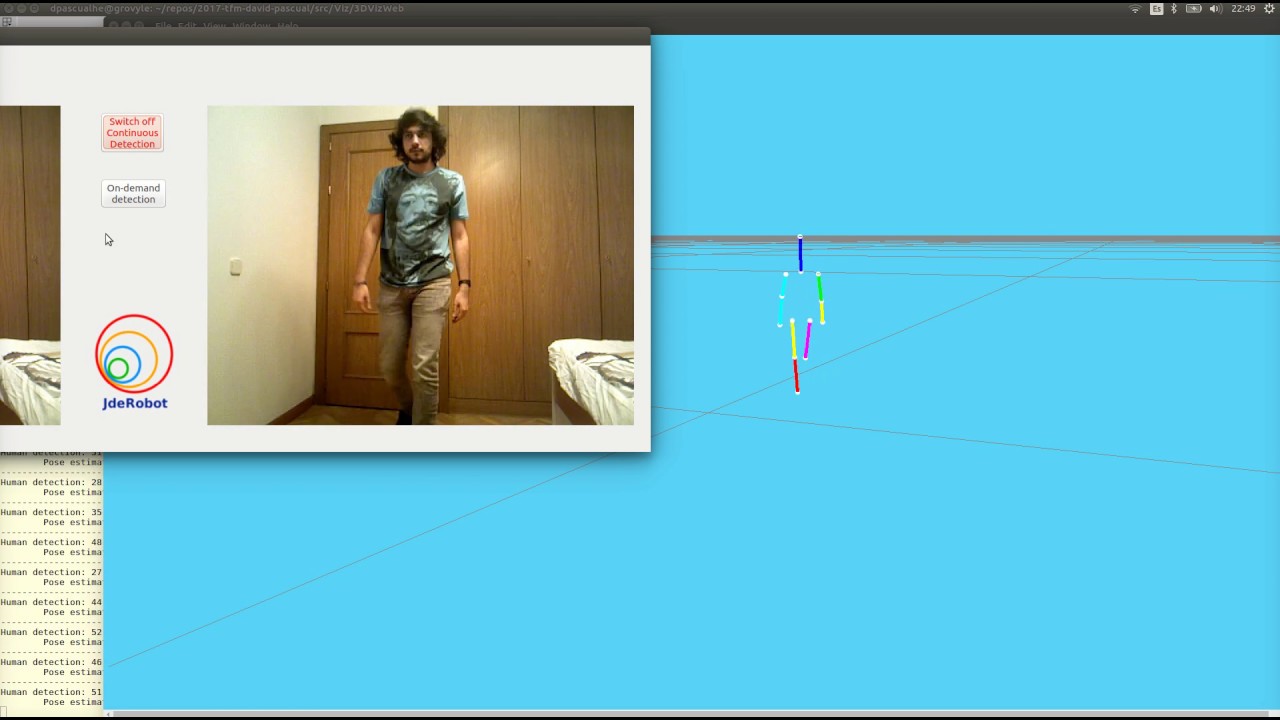

This will launch a GUI where live video and estimated poses are shown.
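The video-source setting in humanpose.yml selects where frames come from. The following is a minimal sketch of how such a setting could be resolved. The key names (`Source`, `DeviceNo`, `Path`, `Topic`) and source names are hypothetical and may not match the real humanpose.yml. For the webcam and video-file cases, the returned target is the kind of value OpenCV's `cv2.VideoCapture` accepts: an integer device index or a file path.

```python
from typing import Any, Dict, Tuple, Union

# Hypothetical source names; the real humanpose.yml may use different ones.
SOURCES = ("ros", "jderobot", "local", "video")


def resolve_source(cfg: Dict[str, Any]) -> Tuple[str, Union[int, str]]:
    """Return (kind, target) for the configured video source.

    kind   -- one of SOURCES
    target -- webcam index, video file path, or ROS topic / JdeRobot proxy name
    """
    kind = str(cfg.get("Source", "local")).lower()
    if kind not in SOURCES:
        raise ValueError(f"unknown video source: {kind!r}")
    if kind == "local":
        return kind, int(cfg.get("DeviceNo", 0))  # 0 is typically /dev/video0
    if kind == "video":
        return kind, str(cfg["Path"])
    # ROS and JdeRobot sources are addressed by name (hypothetical "Topic" key)
    return kind, str(cfg["Topic"])


if __name__ == "__main__":
    print(resolve_source({"Source": "Video", "Path": "clip.mp4"}))  # ('video', 'clip.mp4')
```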

3D Visualization

An RGBD camera can be used to obtain the 3D coordinates of the pose. 3DVizWeb, developed within JdeRobot, allows us to project the estimated joints and limbs in 3D. If you want to use this feature, follow the instructions available here and run:

cd Viz/3DVizWeb/

npm start

right after launching humanpose.
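With a depth image registered to the color image, a joint detected at pixel (u, v) can be lifted to 3D using the standard pinhole camera model: X = (u - cx) * Z / fx and Y = (v - cy) * Z / fy. The following is a minimal sketch that assumes metric depth Z at that pixel and known camera intrinsics. The `Intrinsics` class and the `backproject` function are illustrative, not part of humanpose or 3DVizWeb:

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Intrinsics:
    fx: float  # focal length in pixels, x axis
    fy: float  # focal length in pixels, y axis
    cx: float  # principal point, x (pixels)
    cy: float  # principal point, y (pixels)


def backproject(u: float, v: float, depth: float, k: Intrinsics) -> Tuple[float, float, float]:
    """Lift pixel (u, v) with depth Z into camera coordinates (X, Y, Z)."""
    x = (u - k.cx) * depth / k.fx
    y = (v - k.cy) * depth / k.fy
    return x, y, depth


if __name__ == "__main__":
    k = Intrinsics(fx=500.0, fy=500.0, cx=320.0, cy=240.0)  # illustrative values
    print(backproject(420, 240, 2.0, k))  # (0.4, 0.0, 2.0)
```

A limb can then be drawn in 3D as the segment between two back-projected joints.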

Demo

Click on the image below to watch a real-time demo:

The input data for this demo is available as a rosbag file, containing the registered depth map and color images.
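Registered depth maps from RGBD sensors often contain holes, which are pixels with no valid reading. As a result, reading the depth at exactly the joint pixel can fail. The following is a minimal sketch of a more robust lookup that takes the median of the valid depths in a small window around the joint. It assumes that missing readings are stored as 0 or NaN. The `sample_depth` helper and its default radius are hypothetical:

```python
from statistics import median
from typing import List, Optional

# Depth map as rows x cols; 0 (or NaN) is assumed to mean "no reading".
DepthMap = List[List[float]]


def sample_depth(depth: DepthMap, u: int, v: int, radius: int = 2) -> Optional[float]:
    """Median of the valid depths in a (2*radius+1)^2 window centred on (u, v)."""
    rows, cols = len(depth), len(depth[0])
    values = [
        depth[y][x]
        for y in range(max(0, v - radius), min(rows, v + radius + 1))
        for x in range(max(0, u - radius), min(cols, u + radius + 1))
        if depth[y][x] > 0  # NaN also fails this comparison, so it is skipped
    ]
    return median(values) if values else None


if __name__ == "__main__":
    d = [[0.0, 0.0, 0.0], [0.0, 1.5, 2.0], [0.0, 1.6, 0.0]]
    print(sample_depth(d, 1, 1, radius=1))  # 1.6
```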