FJPF TFM SD-SLAM in Android and OpenGL

Project maintained by RoboticsLabURJC

Table of contents

- Week 1 - Testing SD-SLAM and first mobile app

- Weeks 2-5 - Testing GLESv2 AR and SD-SLAM in a mobile app

- Weeks 6-8 - Integrating SD-SLAM and GLESv2 AR together

- Weeks 9-11 - Improving the AR app

- Weeks 12-13 - Pattern initialization and resolution reduction

- Weeks 14-18 - Adding some new features

- Weeks 19-24 - Solving problems: SelfRecorder, CamTrail and Arrows

- Weeks 25-31 - Inverse and scale drift

- Weeks 32-33 - Going deep in scale drift

- Week 34 - Bad camera location error found

References

The contents of android-AccelerometerPlay are from https://github.com/googlesamples/android-AccelerometerPlay/.

Week 34

I have run some tests using only pure rotations and pure translations (rotating about the x-axis, translating along the x-axis, and so on) to try to find what is failing. In the next video we can see the results.

When I rotate the camera about the z-axis (the axis that goes from the screen to my eyes), the render goes crazy: it moves heavily away from where it was, so the problem seems to be related to the z-axis. From here, I think I need to revisit the projection matrix of the camera, since I don’t think the readings from SD-SLAM are wrong, and the render should stay stationary.
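One way to check the projection in isolation from SD-SLAM: under the pinhole model with square pixels (fx == fy), a pure roll of the camera about its optical axis only rotates the projected points rigidly around the principal point (cx, cy), so their distance to (cx, cy) must not change. The minimal C++ sketch below assumes the computer-vision camera frame (x right, y down, z forward); the helper names are hypothetical. If the rendered object does not follow that circle during a roll, a principal point in the projection matrix that differs from the calibrated one is one plausible suspect.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { double x, y, z; };
struct Pixel { double u, v; };

// Pinhole projection of a point in camera coordinates (x right, y down, z forward).
Pixel projectPinhole(const Vec3& p, double fx, double fy, double cx, double cy) {
    assert(p.z > 0.0);  // only points in front of the camera can be projected
    return {fx * p.x / p.z + cx, fy * p.y / p.z + cy};
}

// Coordinates of a fixed point as seen by a camera that has rolled by `angle` radians
// about its own optical (z) axis: rolling the camera by +angle rotates the point by -angle.
Vec3 applyCameraRoll(const Vec3& p, double angle) {
    const double c = std::cos(angle);
    const double s = std::sin(angle);
    return {c * p.x + s * p.y, -s * p.x + c * p.y, p.z};
}

// Distance in pixels from a projected point to the principal point.
double radiusFromPrincipalPoint(const Pixel& px, double cx, double cy) {
    return std::hypot(px.u - cx, px.v - cy);
}
```

With fx != fy the projected points move on an ellipse instead of a circle, so the check is simplest on a device whose calibration has nearly equal focal lengths.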

I also added a number in the upper-left corner showing the number of keypoints in the current frame. It uses the same colors as the tracker state.

Weeks 32-33

I tried to figure out exactly what the problem we saw last time is. It seems to be scale drift, but while doing some tests I found this:

As we can see, there are still some issues with the projection. I’m using the same intrinsic parameters for SD-SLAM and GLESv2, so the problem seems to be that SD-SLAM builds a bad map of the environment.

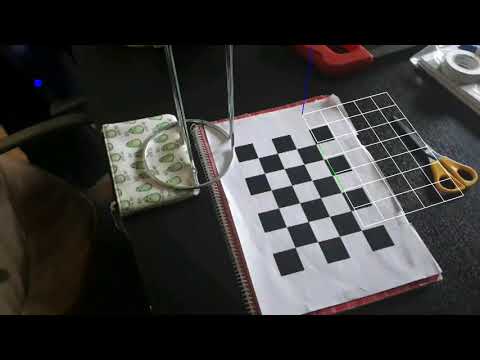
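To tell a projection problem from a mapping problem, one option is to measure the reprojection error: project the map points with the current pose and the shared intrinsics, and compare them with the keypoints they were matched to. A small error while the render is still misplaced would point to the rendering side; a large error would point to the map or the pose. This is a sketch, not SD-SLAM's API: the Observation and Intrinsics structs are hypothetical, and the pose is assumed to be world-to-camera (Xc = Rcw * Xw + tcw).

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <vector>

using Mat3 = std::array<std::array<double, 3>, 3>;
using Vec3 = std::array<double, 3>;

struct Intrinsics { double fx, fy, cx, cy; };
// Hypothetical pairing of a map point (world coordinates) with its matched keypoint (pixels).
struct Observation { Vec3 worldPoint; double u, v; };

// Transform a world point into the camera frame: Xc = Rcw * Xw + tcw.
Vec3 worldToCamera(const Mat3& Rcw, const Vec3& tcw, const Vec3& Xw) {
    Vec3 Xc{};
    for (int i = 0; i < 3; ++i)
        Xc[i] = Rcw[i][0] * Xw[0] + Rcw[i][1] * Xw[1] + Rcw[i][2] * Xw[2] + tcw[i];
    return Xc;
}

// Root-mean-square reprojection error in pixels over the observations in front of the camera.
double rmsReprojectionError(const Mat3& Rcw, const Vec3& tcw, const Intrinsics& K,
                            const std::vector<Observation>& obs) {
    double sumSq = 0.0;
    int n = 0;
    for (const Observation& o : obs) {
        const Vec3 Xc = worldToCamera(Rcw, tcw, o.worldPoint);
        if (Xc[2] <= 0.0) continue;  // behind the camera: cannot be projected
        const double u = K.fx * Xc[0] / Xc[2] + K.cx;
        const double v = K.fy * Xc[1] / Xc[2] + K.cy;
        sumSq += (u - o.u) * (u - o.u) + (v - o.v) * (v - o.v);
        ++n;
    }
    assert(n > 0);
    return std::sqrt(sumSq / n);
}
```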

I have also recorded a video trying to match the AR grid and the pattern:

Weeks 25-31

During this time I solved the problem with the left-handed coordinate system, and the placement of the AR renders also looks like the best so far.

After trying a lot of things, things finally “clicked” in my head and I managed to pin down the mysterious sign problem: it only involved using the inverse of the pose. With that solved I can finally move forward. In the next video we can see how the application behaves once this is solved.
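For reference, inverting a camera pose does not need a general 4x4 inverse. Assuming the tracker returns the world-to-camera transform Tcw = [R t; 0 1] (the usual convention in ORB-SLAM2-style systems), the camera-to-world pose is Twc = [R^T, -R^T t; 0 1], and -R^T t is the camera position in the world. A minimal sketch with hypothetical helper names, using row-major matrices:

```cpp
#include <array>
#include <cassert>

using Mat4 = std::array<std::array<double, 4>, 4>;  // row-major homogeneous transform

// Invert a rigid transform T = [R t; 0 1] as T^-1 = [R^T, -R^T t; 0 1].
Mat4 invertRigid(const Mat4& T) {
    assert(T[3][3] == 1.0);  // expects a homogeneous rigid transform
    Mat4 inv{};
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j)
            inv[i][j] = T[j][i];  // R^T
    for (int i = 0; i < 3; ++i)   // -R^T t
        inv[i][3] = -(inv[i][0] * T[0][3] + inv[i][1] * T[1][3] + inv[i][2] * T[2][3]);
    inv[3] = {0.0, 0.0, 0.0, 1.0};
    return inv;
}

// Plain 4x4 matrix product, used here to check that T^-1 * T is the identity.
Mat4 multiply(const Mat4& A, const Mat4& B) {
    Mat4 C{};
    for (int i = 0; i < 4; ++i)
        for (int j = 0; j < 4; ++j)
            for (int k = 0; k < 4; ++k)
                C[i][j] += A[i][k] * B[k][j];
    return C;
}
```

When R is the identity, the translation of Tcw is exactly minus the camera position, which is why mixing up Tcw and Twc often looks like a sign error.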

We can see that the render starts at the upper-left corner of the checkerboard, at the bottom right of the box. The goal is to keep the render in that place, but I don’t manage to. So I tried again with a new phone (after proper calibration):

Again, there seems to be some scale drift or bad tracking, and the render moves away from the calculated place. I will need to explore whether this is a limitation of purely visual self-localization or a problem in my code.
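Monocular visual SLAM cannot observe absolute scale, so the scale is only fixed at initialization and can drift afterwards. One way to measure drift, rather than judge it from the render, is to pick two map points whose real distance is known (for example two checkerboard corners), compute the metric scale at the start, recompute it later with the same two points, and compare. The sketch assumes such a pair can be identified; the function names are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<double, 3>;

// Euclidean distance between two map points, in SLAM map units.
double distance3(const Vec3& a, const Vec3& b) {
    const double dx = a[0] - b[0], dy = a[1] - b[1], dz = a[2] - b[2];
    return std::sqrt(dx * dx + dy * dy + dz * dz);
}

// Metres per map unit, from two map points whose real separation is known.
double metricScale(const Vec3& a, const Vec3& b, double knownDistanceMeters) {
    const double d = distance3(a, b);
    assert(d > 0.0 && knownDistanceMeters > 0.0);
    return knownDistanceMeters / d;
}

// Relative drift between two scale estimates: 0 means no drift; a positive value
// means the same real distance now spans fewer map units than at the start.
double relativeDrift(double scaleStart, double scaleNow) {
    assert(scaleStart > 0.0);
    return scaleNow / scaleStart - 1.0;
}
```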

Weeks 19-24

It’s been a while since my last report. In this time, I found some problems with the features implemented in the program.

- SelfRecorder: Due to the characteristics of the device I run the tests on, recording what the app shows becomes very tedious, given the resources consumed by the app plus the screen recording. So I’ve created a halfway solution that stores the trajectory followed during a test. With this I intend to reduce the overload and still have something to show.

- CamTrail: Until now the camera trail was static: every time the current pose changed, its position was saved in a list that was later rendered with GLES. This caused some problems in use, often producing errors in how the trail was drawn. I have changed it so that what is rendered are the positions of the KeyFrames collected by SD-SLAM. This makes the trail dynamic, changing according to the information collected by SD-SLAM, and more precise.

- Arrows: I thought I was done fighting with this feature, but nothing could be further from the truth. It still has problems locating exactly where to draw the arrows and which direction they should point in.
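Rebuilding the CamTrail from keyframes can be sketched as follows, assuming each keyframe exposes a world-to-camera rotation R and translation t (Xc = R * Xw + t). The KeyFramePose struct and the function names are hypothetical, not SD-SLAM's classes. The trail vertices are the camera centers C = -R^T t, recomputed from scratch every frame so that any correction SD-SLAM applies to its keyframes shows up immediately; the flat layout (three floats per vertex) is what a GL_LINE_STRIP draw call expects.

```cpp
#include <array>
#include <cassert>
#include <vector>

using Mat3 = std::array<std::array<double, 3>, 3>;
using Vec3 = std::array<double, 3>;

// Hypothetical keyframe pose, world-to-camera: Xc = R * Xw + t.
struct KeyFramePose {
    Mat3 R;
    Vec3 t;
};

// Camera center in world coordinates: C = -R^T * t.
Vec3 cameraCenter(const KeyFramePose& kf) {
    Vec3 c{};
    for (int i = 0; i < 3; ++i)
        c[i] = -(kf.R[0][i] * kf.t[0] + kf.R[1][i] * kf.t[1] + kf.R[2][i] * kf.t[2]);
    return c;
}

// Rebuild the whole trail from the current keyframe poses (call it every frame).
// Layout: x0, y0, z0, x1, y1, z1, ...
std::vector<float> buildTrailVertices(const std::vector<KeyFramePose>& keyframes) {
    std::vector<float> vertices;
    vertices.reserve(keyframes.size() * 3);
    for (const KeyFramePose& kf : keyframes) {
        const Vec3 c = cameraCenter(kf);
        for (double coord : c) vertices.push_back(static_cast<float>(coord));
    }
    return vertices;
}
```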

In the near future, I will also work on including the IMU sensor values to help SD-SLAM with localization.

Weeks 14-18

Since my last update, I made some small changes to the code to clean up the draw functions, creating two new classes for this task (CoordsObject and InitShaders). I also increased the resolution of the OpenGL renders, since it does not seem to reduce performance and it looks better.

I also created an Arrow class to build a path between two points, using arrows as the directions I must follow to go from point A to point B. I’m currently working on this feature and I’m having some issues drawing more than one object.
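A possible way to place the arrows is to spread them evenly along the segment from A to B and give all of them the rotation that turns the arrow model's forward axis into the direction from A to B. The sketch assumes the model points along +X in its local coordinates (adjust to the real mesh) and returns an axis plus an angle in radians, which could be converted to degrees for a call such as android.opengl.Matrix.rotateM. Struct and function names are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Vec3 = std::array<double, 3>;

// Hypothetical placement of one arrow along the path.
struct ArrowPlacement {
    Vec3 position;    // where the arrow is drawn
    Vec3 axis;        // unit rotation axis turning the model's +X into the path direction
    double angleRad;  // rotation angle about `axis`, in radians
};

// Spread `count` arrows evenly along the segment from a to b, all pointing towards b.
std::vector<ArrowPlacement> arrowsAlongSegment(const Vec3& a, const Vec3& b, int count) {
    assert(count >= 1);
    const Vec3 diff = {b[0] - a[0], b[1] - a[1], b[2] - a[2]};
    const double len = std::sqrt(diff[0] * diff[0] + diff[1] * diff[1] + diff[2] * diff[2]);
    assert(len > 0.0);
    const Vec3 d = {diff[0] / len, diff[1] / len, diff[2] / len};

    // Rotation taking +X = (1, 0, 0) onto d: axis = X x d = (0, -d.z, d.y), angle = acos(X . d).
    const double angle = std::acos(std::fmax(-1.0, std::fmin(1.0, d[0])));
    const double axisLen = std::hypot(d[2], d[1]);
    Vec3 axis = {0.0, 1.0, 0.0};  // fallback when d is parallel or anti-parallel to +X
    if (axisLen > 1e-9) axis = {0.0, -d[2] / axisLen, d[1] / axisLen};

    std::vector<ArrowPlacement> arrows;
    arrows.reserve(static_cast<std::size_t>(count));
    for (int i = 0; i < count; ++i) {
        // Center each arrow in its own sub-segment so none sits exactly on a or b.
        const double f = (i + 0.5) / count;
        const Vec3 pos = {a[0] + f * diff[0], a[1] + f * diff[1], a[2] + f * diff[2]};
        arrows.push_back({pos, axis, angle});
    }
    return arrows;
}
```

The parallel and anti-parallel cases need the fallback because the cross product vanishes there; any axis perpendicular to +X then gives the right result.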

Finally, I created a new class (Recorder) to record the screen of the tablet I’m testing with. I had been using an app for this job all along, and since I use it a lot, I decided to program my own recorder. I still need to configure some of the variables to try to increase performance, but it works pretty well. We can see an example in the video below, which also shows an example of SD-SLAM relocalization.

SD-SLAM and AR in Android, Pattern initialization and resolution reduction:

Weeks 12-13

In these weeks I have changed the initialization of SD-SLAM to pattern initialization. To do this, it was necessary to add a couple of “set” functions to the SD-SLAM code, so that it would be easier to access the variables that select the type of initialization and the size of the pattern cells.
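Pattern-based initialization generally relies on knowing the metric 3D position of each pattern corner, which is why the cell size matters: it fixes the scale of the initial map. The sketch below only illustrates that relationship for a planar checkerboard; it is not SD-SLAM's implementation, and the layout (board on the Z = 0 plane, corners listed row by row) is an assumption.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

using Vec3 = std::array<double, 3>;

// Metric 3D coordinates of the inner corners of a planar checkerboard.
// The board lies on the Z = 0 plane with the first corner at the origin;
// X grows along a row and Y from one row to the next.
std::vector<Vec3> checkerboardObjectPoints(int rows, int cols, double cellSize) {
    assert(rows > 0 && cols > 0 && cellSize > 0.0);
    std::vector<Vec3> points;
    points.reserve(static_cast<std::size_t>(rows) * cols);
    for (int r = 0; r < rows; ++r)
        for (int c = 0; c < cols; ++c)
            points.push_back({c * cellSize, r * cellSize, 0.0});
    return points;
}
```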

In addition, I have reduced the resolution of the images supplied to SD-SLAM, which has improved both the efficiency and the quality of the program: it has increased both the frame rate and the rate of measurements. As we can see in the following video, the rendered object no longer seems to float as much as in previous versions.
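When the images given to SD-SLAM are downscaled, a calibration obtained at the original resolution should be scaled by the same factor; otherwise the poses are estimated with the wrong camera model. The sketch uses the common approximation that ignores the half-pixel offset of pixel centers; the Intrinsics struct and function name are hypothetical.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical container for a pinhole calibration at a given image size.
struct Intrinsics {
    double fx, fy, cx, cy;  // focal lengths and principal point, in pixels
    int width, height;      // image size the calibration refers to
};

// Intrinsics for an image resized by factor `s` (e.g. 0.5 for half resolution):
// fx' = s*fx, fy' = s*fy, cx' = s*cx, cy' = s*cy (half-pixel offset ignored).
Intrinsics scaleIntrinsics(const Intrinsics& K, double s) {
    assert(s > 0.0);
    return {K.fx * s, K.fy * s, K.cx * s, K.cy * s,
            static_cast<int>(std::lround(K.width * s)),
            static_cast<int>(std::lround(K.height * s))};
}
```

For example, a 1280x720 calibration reused at 640x360 needs every focal length and principal-point value halved.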

I have also created a function that renders a line as a trail of the camera positions. We can see it in the second video.

SD-SLAM and AR in Android, Pattern initialization and resolution reduction:

SD-SLAM and AR in Android, CamTrail:

Weeks 9-11

During these weeks I tried to improve the behavior of the AR camera: I calculated a plane from the points provided by SD-SLAM to place the grid of the AR object on it. I had some issues with these calculations because the Y and Z axes of SD-SLAM seem to point in the opposite direction to the Y and Z axes of GLESv2 (the library controlling the AR camera).
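Placing the grid on a surface needs a plane fitted to the map points. A minimal least-squares sketch, assuming the surface is roughly horizontal in a frame where y is the vertical axis, so it can be written as y = a*x + b*z + c; for arbitrary orientations, a PCA/SVD fit or RANSAC (to reject outlier map points) would be more robust. The names are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <vector>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<std::array<double, 3>, 3>;

// Plane written as y = a*x + b*z + c (assumes the surface is not parallel to the y-axis).
struct Plane { double a, b, c; };

double det3(const Mat3& m) {
    return m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
         - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
         + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]);
}

// Least-squares fit of y = a*x + b*z + c, solving the 3x3 normal equations with
// Cramer's rule. Returns false for too few or degenerate (e.g. collinear) points.
bool fitPlaneY(const std::vector<Vec3>& pts, Plane& out) {
    if (pts.size() < 3) return false;
    double sxx = 0, sxz = 0, szz = 0, sx = 0, sz = 0, sxy = 0, szy = 0, sy = 0;
    for (const Vec3& p : pts) {
        sxx += p[0] * p[0]; sxz += p[0] * p[2]; szz += p[2] * p[2];
        sx += p[0];         sz += p[2];
        sxy += p[0] * p[1]; szy += p[2] * p[1]; sy += p[1];
    }
    const double n = static_cast<double>(pts.size());
    const Mat3 A = {{{sxx, sxz, sx}, {sxz, szz, sz}, {sx, sz, n}}};
    const Vec3 rhs = {sxy, szy, sy};
    const double d = det3(A);
    if (std::fabs(d) < 1e-12) return false;  // absolute threshold: tune to the map's scale
    double sol[3];
    for (int k = 0; k < 3; ++k) {
        Mat3 M = A;
        for (int i = 0; i < 3; ++i) M[i][k] = rhs[i];  // replace column k by the right-hand side
        sol[k] = det3(M) / d;
    }
    out = {sol[0], sol[1], sol[2]};
    return true;
}

// Unit normal of the plane a*x - y + b*z + c = 0.
Vec3 planeNormal(const Plane& pl) {
    const double len = std::sqrt(pl.a * pl.a + 1.0 + pl.b * pl.b);
    return {pl.a / len, -1.0 / len, pl.b / len};
}
```

The grid could then be centered at the centroid of the points and oriented with planeNormal.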

So I needed to rotate 180º about the x-axis, and I made some mistakes trying to create the appropriate rotation matrix, but they are all solved now.
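The 180º rotation about the x-axis amounts to multiplying the pose by diag(1, -1, -1) on the camera side. A minimal sketch, assuming the pose is a row-major world-to-camera matrix Tcw in the computer-vision convention (x right, y down, z forward) and that the result is uploaded with glUniformMatrix4fv, which in OpenGL ES 2.0 requires column-major data because its transpose argument must be GL_FALSE. The function name is hypothetical.

```cpp
#include <array>
#include <cassert>

using Mat4 = std::array<std::array<double, 4>, 4>;  // row-major homogeneous transform

// Turn a world-to-camera pose in the computer-vision convention (x right, y down,
// z forward) into an OpenGL view matrix (x right, y up, camera looking down -z):
// V = diag(1, -1, -1, 1) * Tcw, i.e. the second and third rows of Tcw are negated.
// The result is column-major (index = col * 4 + row).
std::array<float, 16> cvPoseToGlView(const Mat4& Tcw) {
    assert(Tcw[3][3] == 1.0);  // expects a homogeneous rigid transform
    const double flip[4] = {1.0, -1.0, -1.0, 1.0};
    std::array<float, 16> view{};
    for (int row = 0; row < 4; ++row)
        for (int col = 0; col < 4; ++col)
            view[col * 4 + row] = static_cast<float>(flip[row] * Tcw[row][col]);
    return view;
}
```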

I also changed the projection matrix used by the AR camera so that it uses the calibration parameters of the camera I use to test the code.
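A projection matrix built from the calibration could look like the sketch below. It assumes an OpenGL camera looking down -z with y up (so an OpenGL camera point (X, Y, Z) corresponds to the computer-vision point (X, -Y, -Z)), image pixels with the origin in the top-left corner, and a viewport covering the whole camera image. projectToPixel applies the matrix, the perspective division and the viewport mapping, so it can be used to check that a point lands on the same pixel as the pinhole formula u = fx*x/z + cx, v = fy*y/z + cy. If the camera image is drawn flipped, or the device is in portrait orientation, the signs of the principal-point terms or the roles of width and height change. Function names are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Column-major 4x4 matrix, index = col * 4 + row (the layout glUniformMatrix4fv expects).
using GlMat4 = std::array<float, 16>;

GlMat4 projectionFromIntrinsics(double fx, double fy, double cx, double cy,
                                double width, double height, double zNear, double zFar) {
    assert(width > 0.0 && height > 0.0 && zNear > 0.0 && zFar > zNear);
    GlMat4 P{};                                                           // all zeros
    P[0]  = static_cast<float>(2.0 * fx / width);                         // row 0, col 0
    P[5]  = static_cast<float>(2.0 * fy / height);                        // row 1, col 1
    P[8]  = static_cast<float>(1.0 - 2.0 * cx / width);                   // row 0, col 2
    P[9]  = static_cast<float>(2.0 * cy / height - 1.0);                  // row 1, col 2
    P[10] = static_cast<float>(-(zFar + zNear) / (zFar - zNear));         // row 2, col 2
    P[11] = -1.0f;                                                        // row 3, col 2
    P[14] = static_cast<float>(-2.0 * zFar * zNear / (zFar - zNear));     // row 2, col 3
    return P;
}

// Project a point in OpenGL camera coordinates to image pixels (origin top-left, v down).
std::array<double, 2> projectToPixel(const GlMat4& P, double X, double Y, double Z,
                                     double width, double height) {
    const double clipX = P[0] * X + P[4] * Y + P[8] * Z + P[12];
    const double clipY = P[1] * X + P[5] * Y + P[9] * Z + P[13];
    const double clipW = P[3] * X + P[7] * Y + P[11] * Z + P[15];
    assert(clipW > 0.0);  // the point must be in front of the camera (Z < 0)
    const double xNdc = clipX / clipW;
    const double yNdc = clipY / clipW;
    return {(xNdc + 1.0) * 0.5 * width, (1.0 - yNdc) * 0.5 * height};
}
```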

Finally, I included a small dot in the upper-left corner of the images provided by SD-SLAM that shows the state of the tracker (green -> not initialized, blue -> ok, red -> lost), which makes testing simpler.
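The state indicator reduces to a lookup from tracker state to color plus a small fill. The enum below is hypothetical: SD-SLAM's tracker may use different state names and values, so the mapping would need to be adapted. The sketch assumes an RGB image with 3 bytes per pixel; if the image uses another channel order (OpenCV commonly uses BGR), the channels should be swapped accordingly.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical tracker states; SD-SLAM's own enum may differ.
enum class TrackerState { NotInitialized, Ok, Lost };

struct Rgb { std::uint8_t r, g, b; };

// Color code of the status dot: green = not initialized, blue = ok, red = lost.
Rgb stateColor(TrackerState s) {
    switch (s) {
        case TrackerState::NotInitialized: return {0, 255, 0};
        case TrackerState::Ok:             return {0, 0, 255};
        case TrackerState::Lost:           return {255, 0, 0};
    }
    return {255, 255, 255};  // only reached for out-of-range enum values
}

// Fill a size x size square in the top-left corner of an RGB image
// (row-major, 3 bytes per pixel) with the color of the current state.
void drawStateDot(std::vector<std::uint8_t>& rgb, int width, int height,
                  TrackerState s, int size) {
    assert(static_cast<std::size_t>(width) * height * 3 == rgb.size());
    const Rgb c = stateColor(s);
    for (int y = 0; y < size && y < height; ++y) {
        for (int x = 0; x < size && x < width; ++x) {
            const std::size_t i = (static_cast<std::size_t>(y) * width + x) * 3;
            rgb[i] = c.r;
            rgb[i + 1] = c.g;
            rgb[i + 2] = c.b;
        }
    }
}
```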

The results are not yet what I expected: the point calculated to translate the AR object to seems to float instead of lying on the flat surface on which I am trying to initialize it.

In the following video we can see better what I’m trying to say.

SD-SLAM and AR in Android, second try including plane calculation and calibration parameters:

Weeks 6-8

In this period I tried to combine SD-SLAM for Android and the AR program, with the goal of using the calculations of SD-SLAM to update the AR program so that the rendered object appears to be at a point in the space captured by the camera.

These changes consisted of applying the translations and rotations provided by SD-SLAM to the AR camera. However, those changes did not reflect the movement of the object on the screen well, so I tried to take a 3D point calculated by SD-SLAM and translate the AR object to it. This did not reflect the movement well either, and the calculated 3D point appears outside the bounds of the projection in the AR program.

Therefore, it will be necessary to make some calculations before transferring the data from SD-SLAM to the AR program. In the next video we can see the behavior of these changes.

SD-SLAM and AR in Android, first try:

Weeks 2-5

In these weeks I have implemented my first AR program for Android and I have also installed SD-SLAM for Android. These programs have been put into separate activities, alongside the initial program that showed an example of OpenCV use. In the future I will modify this program and remove these features, since they are not the objective of the work, but for the moment I will keep them in case they can help someone.

The AR program consists of an object rendered with OpenGL (GLESv2) over the images provided by the mobile camera. We can see the results in the following video:

A mobile AR example using GLESv2 and Android:

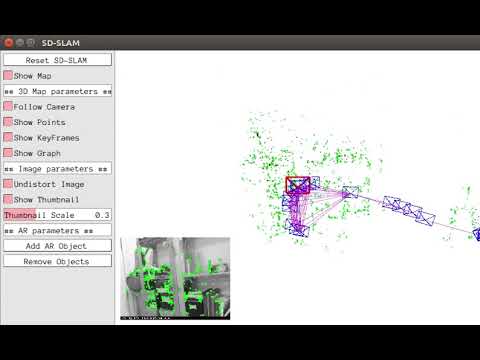

To use SD-SLAM on mobile, I followed as a guide the work of Eduardo Perdices, which can be found at the following link: https://gitlab.jderobot.org/slam/slam-android.

In the next video, we can see how this program works:

SD-SLAM in Android:

Week 1

To start, I read the work of Eduardo Perdices: “Techniques for robust visual localization of real-time robots with and without maps” (https://gsyc.urjc.es/jmplaza/pfcs/phd-eduardo_perdices-2017.pdf).

After that, I installed the SD-SLAM packages and ran it with my own camera to test how it worked.

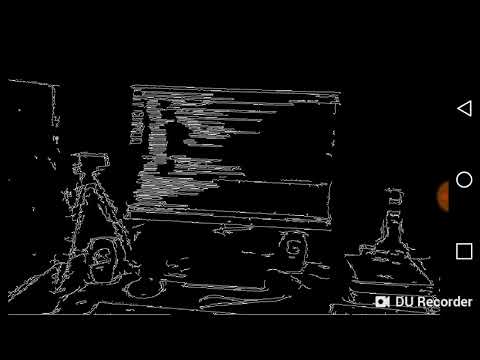

Finally, I started an Android application. As this work will use SD-SLAM + AR in a mobile environment, I started to create an Android application that uses native C++ code (since SD-SLAM is written in C++), adding the OpenCV libraries, which will probably be very helpful. The video below shows the resulting app, which applies the Canny edge detector through the OpenCV libraries.

Canny example in Android using OpenCV libraries:

SD-SLAM Test:

[SD-SLAM Test video](http://www.youtube.com/watch?v=L_nHDsnPDD0)